The first in a series of Algorithm Stories.

In this blog post, the first in a series, we would like to reinforce the idea that our architecture is code-driven. On our architecture overview page, we have a designed an animated sequence that abstracts and introduces the main features of our instruction set architecture. Let’s have a look at some of those features in more detail. Here we look at the animated sequence and address each part and see how we will control it with basic SDK API calls. Expect more from the series in the future. But first, we will start with a simple example to show the power of the Source Mode features of the quadric SDK.

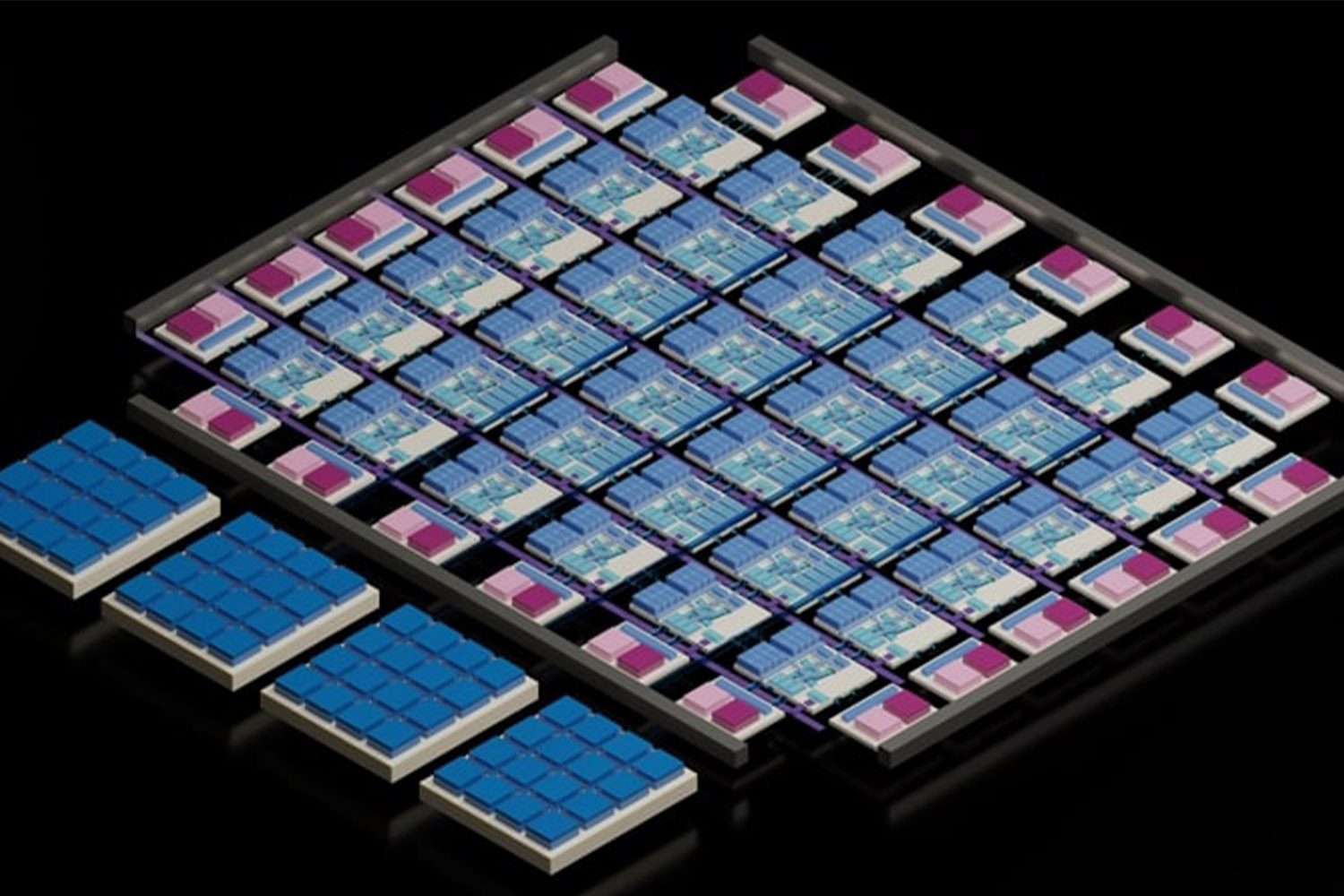

Large local memories offer the developer space for large data structures. Multiple ports allow for simultaneous reading and writing to keep the vortex core array busy.

The cores in the same architecture group get the same instruction. Let’s look at the following block of code:

#define NUM_TIMES = 10;

qVar_t <std::int32_t> qData[NUM_TIMES];

for(std::int32_t count = 0; count < NUM_TIMES; count++) {

qData[count] += 2 * count;

}In this block of code, qvar qData is being declared in each core at the same time. We define NUM_TIMES as 10, and the corresponding data structure inside of each Vortex Core is 10 entries deep. The compiler will allocate space for it in the local RF, and each Core’s address will be the same. For a 256 Vortex Core architecture like the one on the q16 Processor, this is 2560 total unique variables, 10 for each core. We compute the value of each entry of qData to 2 times the loop variable. 2 * count is happening 256 times, at the same time, for each loop iteration.

What if we want subgroups of cores to do slightly different things? We achieve this through an architectural feature called prediction. Predication allows us to use the dynamic runtime information from any variable present in the architecture to change the behavior of our program execution. This can also be used to implement compute-sparsity or early loop termination.

Continuing to build, we want even columns to do something slightly differently from odd columns. Instead of multiplying the iteration count by a constant, we now take information from our nearest neighboring Vortex Core. In the case of even columns, the result will be passed to the east. While in the case of the odd columns, the product will be given to the west.

We can do something simple: update the value of a variable by multiplying the value of a loop variable with a value from the physically neighboring Vortex Core. We receive this value from the East for even columns, and we pass our value to the West. For odd columns, we do the opposite. Each Vortex Core has knowledge of its physical placement. We conditionally branch based on whether the column is even or odd. Here is the resulting code:

#define NUM_TIMES = 10;

qVar_t<std::int32_t> qData[NUM_TIMES];

for(std::int32_t count = 0; count < NUM_TIMES; count++) {

qWest<> = qData[count];

qEast<> = qData[count];

if(qCol<> % 2 == 0) {

// the column of the core is even

qData[count] += qEast<> * count;

} else {

// the column of the core is odd

qData[count] += qWest<> * count;

}

}With this simple example, we see the power of predication against a single instruction for every Vortex Core in an architecture group. In addition to that, we see how multi-directional data flow can be programmed within the Array itself. To tie it back to the architecture visualization, here is what is happening in terms of data flow:

Edge load-store units are static and completely software-controlled, allowing for deterministic kernel runtimes. Each edge has a load-store unit, unlocking novel software API possibilities such as native data rotations and data remapping.

Let’s address how the data arrived at the cores in the first place. And, more importantly, how the developer controls those functions. Data flow is an important concept when discussing any dense data algorithm running on a parallel architecture. Ensuring data reuse and minimizing data movements will lead to optimal overall algorithm performance and minimized power consumption.

Our load-store units can load into the Array or store from the Array from any side. We can load from one side at the same time we store in the other. This allows for a good deal of generalized algorithmic possibilities but let’s look at a simple case: loading data into the array from the North while storing data into OCM via the South.

qVar_t<std::int32_t> qData[OcmInOutShape::NUM_TILES];

fetchAllTiles<IteratorType::YX_NO_BORDER>(ocmInp, qData);

// Add Neighbors

for(std::int32_t tileNum = 0; tileNum < OcmInOutShape::NUM_TILES; tileNum++) {

qWest<> = qData[tileNum];

qEast<> = qData[tileNum];

qBroadcast<0, std::int32_t, BroadcastAction::POP>

if(qCol<> % 2 == 0) {

// the column of the core is even

qData[tileNum] += qEast<> * tileNum + qBroadcast<0, std::int32_t>

} else {

// the column of the core is odd

qData[tileNum] += qWest<> * tileNum + qBroadcast<1, std::int32_t>

}

}

// Flow out data

writeAllTiles<IteratorType::YX_NO_BORDER>(qData, ocmOut);fetchAllTiles is an iterator in the SDK. The basic idea is these iterators will iterate through multi-dimensional tensors with a particular convention, in this case, YX, and send that data into 2-dimensional slices that can be mapped into the Array itself. Once we have complete the compute, we have a call to writeAllTiles that does the reverse, storing the qData Array variables to the on-chip memory. An important thing to note is that the main loop, in this case, is now done OcmInOutShape::NUMTILES times, which corresponds to the number of 2D YX slices that exist in the tensor ocmInp. Our compiler takes any opportunity to overlap the previous writeAllTiles command with the subsequent fetchAllTiles command in the outer kernel loop.

This results in a visualization that looks something like this:

We have an section in the docs on iterators and Load/Store control. https://docs.quadric.io/templates/api/ocm-array.html

Large local memories offer the developer space for large data structures. Multiple ports allow for simultaneous reading and writing to keep the vortex core array busy.

Another essential thing to note is the architecture comes with a configurable size on-chip memory. The memory is configured to enable at least 1 simultaneous read and write. On the q16 Processor, the architecture instance contains an 8MB memory configured into 4 slices with 1 simultaneous read and 1 simultaneous write possible. The memory is large enough to hold data structures such as frame buffers, neural network weights, more significant intermediary dynamic memory, etc.

typedef DdrTensor<std::int32_t, 1, 1, (1 * Epu::coreDim), (3 * Epu::coreDim)> DdrInOutShape;

typedef OcmTensor<std::int32_t, 1, 1, (1 * Epu::coreDim), (3 * Epu::coreDim)> OcmInOutShape;

EPU_ENTRY void even_odd_example_int32(DdrInOutShape::ptrType ddrInpPtr,

DdrInOutShape::ptrType ddrOutPtr) {

MemAllocator ocmMem;

DdrInOutShape ddrInp(ddrInpPtr);

DdrInOutShape ddrOut(ddrOutPtr);

OcmInOutShape ocmInp;

ocmMem.allocate(ocmInp);

OcmInOutShape ocmOut;

ocmMem.allocate(ocmOut);

...

}In this example, we have some basic tensor types defined. DdrTensor will instruct the compiler to allocate memory within the off-chip external DDR interface. With the quadric Developer Kit, we’ve included 4GB of physical memory. So any tensor allocated using the DdrTensor will physically be stored in that external memory buffer. OcmTensor will instruct the compiler to allocate memory within the on-chip SRAM array. Inside of the q16 processor, we’ve included 8MB of on-chip SRAM. Any tensor of OcmTensor type will physically reside in this 8MB memory region. Here we create two tensors using those types an input tensor and an output tensor.

The broadcast bus transmits loop invariant data, such as weights and constants, to all Vortex Cores at once.

Let’s reinforce the concept of constants broadcast by building on our example. First, let’s introduce a few simple concepts before bringing them in with the rest of the code. Each cycle, we can transfer up to 8bytes worth of weight data to all cores simultaneously. Let’s say we take the example we’ve been building up and offset the odd cores and even cores, each with different constants.

qVar_t<std::int32_t> qData[OcmInOutShape::NUM_TILES];

fetchAllTiles<IteratorType::YX_NO_BORDER>(ocmInp, qData);

// do some math on every core in the Array

for(std::int32_t tileNum = 0; tileNum < OcmInOutShape::NUM_TILES; tileNum++) {

qWest<> = qData[tileNum];

qEast<> = qData[tileNum];

qBroadcast<0, std::int32_t, BroadcastAction::POP>

if(qCol<> % 2 == 0) {

// the column of the core is even

qData[tileNum] += qEast<> * tileNum + qBroadcast<0, std::int32_t>

} else {

// the column of the core is odd

qData[tileNum] += qWest<> * tileNum + qBroadcast<1, std::int32_t>

}

}The call inside the loop will instruct every core to look at the information on the 8-byte wide broadcast bus. All even Vortex Cores will take the first 4 byte offset from the bus and add it to the existing expression. While the odd Cores will take the second 4 bytes from the broadcast bus and add those.

Putting it all together

typedef DdrTensor<std::int32_t, 1, 1, (1 * Epu::coreDim), (3 * Epu::coreDim)> DdrInOutShape;

typedef OcmTensor<std::int32_t, 1, 1, (1 * Epu::coreDim), (3 * Epu::coreDim)> OcmInOutShape;

EPU_ENTRY void even_odd_example_int32(DdrInOutShape::ptrType ddrInpPtr,

DdrInOutShape::ptrType ddrOutPtr) {

MemAllocator ocmMem;

DdrInOutShape ddrInp(ddrInpPtr);

DdrInOutShape ddrOut(ddrOutPtr);

OcmInOutShape ocmInp;

ocmMem.allocate(ocmInp);

OcmInOutShape ocmOut;

ocmMem.allocate(ocmOut);

memCpy(ddrInp, ocmInp);

qVar_t<std::int32_t> qData[OcmInOutShape::NUM_TILES];

fetchAllTiles<IteratorType::YX_NO_BORDER>(ocmInp, qData);

// do some math on every core in the Array

for(std::int32_t tileNum = 0; tileNum < OcmInOutShape::NUM_TILES; tileNum++) {

qWest<> = qData[tileNum];

qEast<> = qData[tileNum];

qBroadcast<0, std::int32_t, BroadcastAction::POP>

if(qCol<> % 2 == 0) {

// the column of the core is even

qData[tileNum] += qEast<> * tileNum + qBroadcast<0, std::int32_t>

} else {

// the column of the core is odd

qData[tileNum] += qWest<> * tileNum + qBroadcast<1, std::int32_t>

}

}

// Flow out data

writeAllTiles<IteratorType::YX_NO_BORDER>(qData, ocmOut);

memCpy<OcmInOutShape, DdrInOutShape>(ocmOut, ddrOut);

}Putting it all together, we’ve constructed some basic code examples and tied them to an architecture visualization. This article is meant to connect visual concepts of the architecture with the APIs that drive it. For more detailed technical information and a complete description of the latest API release version, check out docs.quadric.io. And check back here for more entries in the “Algorithm Stories” series as we build upon the principles established here to describe and visualize more algorithms in the future.

© Copyright 2024 Quadric All Rights Reserved Privacy Policy